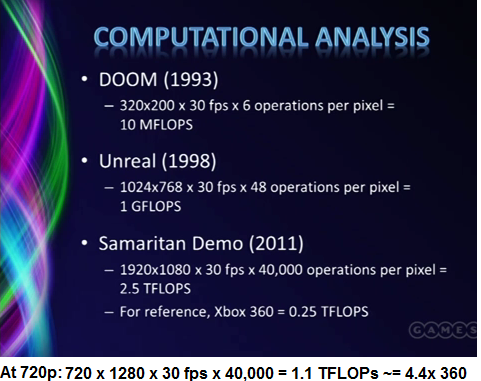

The Wii U can, in theory, run the Samaritan demo.

#22

Posted 13 February 2012 - 06:44 PM

I DONT DINK DOSE R POLICE MENS. I LIEKED TEH PART WHERE HE BEAT TEH CRAP OUT OF TEH POLICEMEN!

- SleepyGuyy likes this

#23

Posted 14 February 2012 - 03:25 AM

Edited by The Lonely Koopa, 14 February 2012 - 03:25 AM.

#25

Posted 16 February 2012 - 11:14 AM

Dude....what....

A 4870 would NOT make the Wii U 12x more powerful than the 360! A 12x jump is a completely unrealistic expectation for the Wii U! It's even unrealistic for the PS4/720.

See that? It takes a GPU with 2.5 TFLOPS to run Samaritan at 1080p 30fps.

We'd be lucky to get such GPUs even from the PS4/720 in 2013.

It takes 4.4 times the power of the 360 to run Samaritan at 720p, and since the PS4 & 720 will no doubt be more powerful than that when they launch in 2013 (I'm expecting 6-8x more powerful), they can run Samaritan at 720p with all effects.

The Wii U is expected to be 3-5x the 360, so it can run it either at 720p with reduced effects or at sub-HD.
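The 4.4x figure above can be roughly reproduced with simple pixel-count scaling. A rough sketch in Python, assuming (neither is stated in the thread) that the required GPU throughput scales linearly with the number of pixels rendered, and that the Xbox 360's GPU is worth about 0.25 TFLOPS (it is commonly quoted at around 240 GFLOPS). Both are simplifications; real rendering cost does not scale perfectly with resolution.

```python
# Rough sketch: scale the quoted 1080p Samaritan requirement down to 720p.
# ASSUMPTIONS (not from the thread):
#   - GPU cost scales linearly with pixel count
#   - the Xbox 360 GPU is taken as ~0.25 TFLOPS (commonly quoted ~240 GFLOPS)

SAMARITAN_1080P_TFLOPS = 2.5  # figure quoted in the post
XBOX360_TFLOPS = 0.25         # assumed baseline, see above


def pixel_count(width: int, height: int) -> int:
    return width * height


def required_tflops(width: int, height: int) -> float:
    """Scale the 1080p requirement linearly by pixel count."""
    ratio = pixel_count(width, height) / pixel_count(1920, 1080)
    return SAMARITAN_1080P_TFLOPS * ratio


def multiple_of_360(tflops: float) -> float:
    return tflops / XBOX360_TFLOPS


if __name__ == "__main__":
    need = required_tflops(1280, 720)
    print(f"720p needs ~{need:.2f} TFLOPS, ~{multiple_of_360(need):.1f}x the 360")
    # Compare against the rumoured 3-5x range for the Wii U.
    for wiiu in (3, 5):
        verdict = "enough" if wiiu >= multiple_of_360(need) else "not enough"
        print(f"Wii U at {wiiu}x the 360: {verdict} for full effects at 720p")
```

Under these assumptions 720p needs about 1.11 TFLOPS, roughly 4.4x the 360, which sits inside the rumoured 3-5x range: the low end falls short and the high end clears it.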

Don't get people's hopes up with false information.

Yes, 2.5 TFLOPS, ON PC HARDWARE. PCs require much stronger GPUs and CPUs than consoles do to run the SAME games, I'm sure you know this. And like I said, everything I'm going off is pure speculation based on unconfirmed rumors. So I'm not purposely trying to give false hope, and it's highly possible that I could be wrong. I made that clear in my post.

Consoles aren't optimized for gaming either; they're optimized for cost. If that weren't the case, all consoles would have 3x the amount of RAM they have now.

Looking back on it, the Wii U has a chance of running this game, but this demo in particular relies much, much more heavily on GPU compute than any consumer program before it, which is not something ATI does quite as well as Nvidia.

Yeah they are, lol. They're GAME systems, bro; they are literally MADE for gaming, unlike PCs. That's why, when Sony, Nintendo and Microsoft make a console, the CPUs and GPUs they use are all BASED on PC processors: because they optimize and customize them. That's why consoles can run the same games PCs can, but on weaker hardware. Because they're optimized for games.

As for RAM, consoles don't require nearly as much RAM to play games as PCs do. Consoles can do so much with so little RAM.

Edited by Shokio, 16 February 2012 - 11:16 AM.

- SleepyGuyy and Crackkat like this

#26

Posted 18 February 2012 - 02:23 PM

Consoles aren't optimized for gaming either; they're optimized for cost. If that weren't the case, all consoles would have 3x the amount of RAM they have now.

Looking back on it, the Wii U has a chance of running this game, but this demo in particular relies much, much more heavily on GPU compute than any consumer program before it, which is not something ATI does quite as well as Nvidia.

Wait, WHAT!!??

Mate, just re-read what you just said there.

"Consoles aren't optimised for gaming"

Then that defeats its entire purpose of being a gaming system.

"They're optimised for cost".

"$599 U.S Dollars"


#27

Posted 18 February 2012 - 03:07 PM

Edited by BazzDropperz, 14 July 2014 - 01:02 AM.

#28

Posted 19 February 2012 - 08:24 AM

If the Wii U does have a chip with similar horsepower to the 4870, then that would make it some 5 times faster. Also, there is little chance that MS will use a 6670 in the next Xbox. The recently released 7770 would cost MS about $30 per chip and be 70% faster than a 6670; there is no way that a company with unlimited cash is going to go cheap on a GPU. Expect the next-gen Xbox to use something similar to a 7850, which will be a little slower than the current 6950.

Finally... it's unlikely that the Wii U will be as powerful as a 4870, due to the amount of heat it, and the CPU required to drive it, would generate. One of the only bits of solid info we were given is that the CPU would be 45nm, which is very, very old tech; Intel was on 45nm 5 years ago. Most likely it will be 50% faster than the current Xbox.
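The "5 times" and "70% faster" figures above line up with the usual back-of-the-envelope peak-throughput formula: shader lanes × clock × 2 (one multiply-add, i.e. two floating-point operations, per lane per cycle). The shader counts and clocks below are the commonly published reference specs for each part, not numbers from the thread, and peak FLOPS is only a rough proxy for game performance (it ignores memory bandwidth, architecture differences and drivers).

```python
# Back-of-the-envelope peak single-precision throughput.
# ASSUMPTION: each shader lane does one multiply-add (2 FLOPs) per cycle.
# Shader counts and clocks are commonly published reference specs,
# not figures from the thread.

GPUS = {
    # name: (shader lanes, core clock in MHz)
    "Xbox 360 (Xenos)": (48 * 5, 500),  # 48 units, vec4 + scalar each
    "Radeon HD 4870": (800, 750),
    "Radeon HD 6670": (480, 800),
    "Radeon HD 7770": (640, 1000),
}


def peak_gflops(lanes: int, clock_mhz: int) -> float:
    """lanes x clock x 2 FLOPs per cycle, converted from MFLOPS to GFLOPS."""
    return lanes * clock_mhz * 2 / 1000


if __name__ == "__main__":
    flops = {name: peak_gflops(*spec) for name, spec in GPUS.items()}
    for name, g in flops.items():
        print(f"{name:18} {g:7.0f} GFLOPS")
    print("4870 vs 360:", flops["Radeon HD 4870"] / flops["Xbox 360 (Xenos)"])
    print("7770 vs 6670:", flops["Radeon HD 7770"] / flops["Radeon HD 6670"])
```

That gives 1200 / 240 = 5.0x for the 4870 over the 360, and 1280 / 768 ≈ 1.67 (about 67% faster) for the 7770 over the 6670, close to the 70% quoted.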

#29

Posted 19 February 2012 - 08:48 AM

#30

Posted 19 February 2012 - 11:30 AM

Die size has nothing to do with how old the tech is, Medu. The IBM POWER7, a variation of which the Wii U will be using, is 45nm, and that came out in 2010. Not only that, but although they may be similar (and I've lost count of how many times I've said this in the forum), CONSOLES DON'T USE PC GPUs. The GPU will be small enough, and the Wii U will be designed so the chipset doesn't overheat and melt.

Die size and process nodes are two totally different things. Every ~2 years we get a doubling of transistors for the same die space, which cuts cost, or allows more complex chips, OR chips that run at higher frequencies. The fact that Nintendo is using an outdated process node suggests that they are not going to be very aggressive with the hardware in the Wii U.

The original Xbox used a PC GPU, as did the PS3: slightly altered, but basically PC chips. Anyone that uses an AMD chip for the next gen will be using a PC-based chip, or a chip design that will be used in a PC soon thereafter.
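The "~2 years per doubling" rule of thumb and the idea of an outdated node can be put in numbers. A minimal sketch, assuming ideal scaling (transistor density goes with the inverse square of the feature size, which real processes only approximate) and a fixed two-year doubling period; 32nm is used here only as the next full node after 45nm, for illustration.

```python
# Idealised process-scaling arithmetic (rules of thumb, not real fab data).


def ideal_density_gain(old_nm: float, new_nm: float) -> float:
    """Ideal transistor-density gain from a node shrink: area scales with length squared."""
    return (old_nm / new_nm) ** 2


def moore_multiplier(years: float, doubling_period: float = 2.0) -> float:
    """Transistor budget for the same die area after `years`, doubling every period."""
    return 2 ** (years / doubling_period)


if __name__ == "__main__":
    # One full node step (45nm -> 32nm) is roughly a doubling, i.e. ~2 years.
    print(f"45nm -> 32nm: {ideal_density_gain(45, 32):.2f}x density")
    # A chip built on a node ~4 years behind gets ~1/4 the transistors per mm^2.
    print(f"4 years of doubling: {moore_multiplier(4):.0f}x")
```

The two rules of thumb agree: one node step (about 1.98x) is roughly one two-year doubling.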

Edited by Medu, 19 February 2012 - 11:30 AM.

#31

Posted 19 February 2012 - 12:08 PM

The original Xbox used a PC GPU, as did the PS3: slightly altered, but basically PC chips. Anyone that uses an AMD chip for the next gen will be using a PC-based chip, or a chip design that will be used in a PC soon thereafter.

Yeah, that's the point, it's PC BASED, not a PC GPU, they can customize it so that it doesn't melt.

Simple as that.

- InsaneLaw likes this

#32

Posted 19 February 2012 - 01:57 PM

Yeah, that's the point, it's PC BASED, not a PC GPU, they can customize it so that it doesn't melt.

Simple as that.

And they do that by making them slower. There are a few things they can do to increase performance per watt, but it doesn't make a huge difference. At the high end, the Wii U is looking at a 6670-spec chip, which might be where that Xbox rumour came from.

#33

Posted 19 February 2012 - 03:46 PM

For example, if you compare an Apple Mac (not in video games, but in the OS and graphics applications) with a Windows PC, the Mac needs half the hardware and performs better than a PC with much, much better hardware! It's because of the OS, DLLs, etc. (This has changed now: Apple no longer supports Nvidia, since they use Intel CPUs, which Nvidia has challenged in court, and Apple isn't cooperating with Nvidia anymore.)

consoles are: hardware -> screen

Macs are: hardware -> OS -> keyboard -> screen

PCs are: hardware -> OS/DLLs -> keyboard -> hardware -> DLLs -> screen (the map isn't exact, but it's roughly that).

That's why PC users turn to Linux or Hackintosh (a Hackintosh is a PC running Mac OS). Another example is my video card, a GTX 480: in hardware terms it's much more powerful than a GTX 580. They cut 1 billion transistors off the GTX 480 to create the GTX 580, to make it lighter and cooler!!! (That's why it performs better: because of heat and overclocking!) Also, all the pro video cards that cost us 7,000 dollars or euros are equal to or less powerful than a GTX 480; it's just the drivers that make the difference. It's a marketing thing.

Another example is the 7xxx series video cards: 4-way CrossFire gets 380-520 fps in Battlefield 3 at 1080p!! And now that Keplers are coming out, the lower models, the GTX 660s and 660 Tis, will perform higher than that!!! It's all just crap and marketing. Today's consoles run at 30 fps @ 720p; that's why their textures sometimes look washed out, because they stretch them out to 1080p!

I've read that the Wii U runs at 60 fps at 1080p; if that's true, it's good news!!! Let me put it differently: there is no PC gamer playing games today on a PC with Xbox 360 or PS3-level tech. But there are millions of PC gamers with Wii U-level tech in their PCs, or even less than that! We just need the Wii U to be powerful enough to keep up with the 720 and PS4... that's all.
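To put the 720p/30 fps versus 1080p/60 fps comparison in numbers: upscaling 720p to 1080p stretches each axis by 1.5x (2.25x the pixels), and rendering natively at 1080p and 60 fps means pushing 4.5 times as many pixels per second as 720p at 30 fps. A minimal sketch, assuming rendering cost scales with pixels drawn per second, which ignores everything else a game has to do each frame.

```python
# Pixel-throughput arithmetic for the resolutions and frame rates in the post.
# ASSUMPTION: rendering cost scales with pixels drawn per second.


def pixels_per_second(width: int, height: int, fps: int) -> int:
    return width * height * fps


def frame_time_ms(fps: int) -> float:
    return 1000 / fps


if __name__ == "__main__":
    console_now = pixels_per_second(1280, 720, 30)
    rumoured_wiiu = pixels_per_second(1920, 1080, 60)
    print(f"720p -> 1080p upscale: {1080 / 720}x per axis")
    print(f"1080p60 vs 720p30: {rumoured_wiiu / console_now}x pixels per second")
    print(f"frame budget: {frame_time_ms(30):.1f} ms at 30 fps, "
          f"{frame_time_ms(60):.1f} ms at 60 fps")
```

Going from 33.3 ms to 16.7 ms per frame while drawing 2.25x the pixels is why the rumoured 1080p/60 figure would need far more than a small bump over current consoles.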

Edited by Orion, 19 February 2012 - 04:04 PM.

- Hinkik likes this

#34

Posted 20 February 2012 - 08:12 AM

#35

Posted 21 February 2012 - 01:18 PM

They cut them in half. Do you want me to give you a link about it? Hold on... it's going to be old though, as it's not fresh news. This happened for many reasons; even Tesla told Nvidia NOT to give a gaming card such powerful computing capabilities, because the pro cards didn't sell at all!!! A GTX 480 is much better in 3D, if we optimize it (with Quadro drivers, etc.), than a Quadro itself.

http://www.guru3d.co...-functionality/

It has more CUDA cores (512) but fewer transistors. And I think the new Keplers have even fewer, for less power and heat, but they do have more CUDA cores: 768 (about that, on the small model!! the GTX 660).

So basically: GTX 480, 480 CUDA cores = 3.2 billion transistors; GTX 580, 512 CUDA cores = 3.0 billion. Maybe that's why plenty of sites claim the GTX 480 performs better in CUDA: theoretically it's more powerful, as the GTX 580 has slower compute due to fewer transistors (?). The GTX 580 performs 15-20% better in games because of less heat and slightly lower power consumption, not because it's better.

Nvidia very quickly made the GTX 480 vanish from the market; there is a reason for it! To be honest, I bought my GTX 480 for Photoshop and some design work, and now I may buy Keplers for my gaming!! Unless Keplers also perform better in 3D and photography (I doubt it). If you search for 3D benchmarks (not demos), you won't find the 5xx series anywhere near Quadro or 4xx series performance. At least, I haven't so far.

Edited by Orion, 21 February 2012 - 01:54 PM.

#36

Posted 21 February 2012 - 02:21 PM

#37

Posted 26 February 2012 - 06:31 AM

Wait, WHAT!!??

Mate, just re-read what you just said there.

"Consoles aren't optimised for gaming"

Then that defeats its entire purpose of being a gaming system.

"They're optimised for cost".

"$599 U.S Dollars"

He means optimized for mass consumption and production...

Consoles have to sell to a large audience or else the game creators have no reason to make games for them, no money to be made.

Yes, $600 seems like a lot, but considering what it can do (remember, HD was pretty new when the PS3 came out; it was ahead of the game and has a much longer lifespan [the Xbox just slightly less]; also, Sony started off selling the PS3 at a loss, so they were concerned about price), $600 is a steal for games of that fidelity and size.

Yes, they are gaming machines, but he meant they are not top-of-the-line game-running monsters, which they could be if it weren't for the cost restriction.

Ok, since everyone seems to have forgotten, I'll repeat what I said earlier. Someone from Epic stated that at the time they showed us the demo it wasn't optimized, and once it is, it would be able to run on hardware about half as powerful.

... nope

They mean hardware will eventually be able to run two of those at once (well, twice the effects at least)... but not one of those on older hardware. Epic can be a little... sloppy.

Edited by SleepyGuyy, 26 February 2012 - 06:32 AM.

#38

Posted 26 February 2012 - 09:57 AM

He means optimized for mass consumption and production...

Consoles have to sell to a large audience or else the game creators have no reason to make games for them, no money to be made.

Yes, $600 seems like a lot, but considering what it can do (remember, HD was pretty new when the PS3 came out; it was ahead of the game and has a much longer lifespan [the Xbox just slightly less]; also, Sony started off selling the PS3 at a loss, so they were concerned about price), $600 is a steal for games of that fidelity and size.

Yes, they are gaming machines, but he meant they are not top-of-the-line game-running monsters, which they could be if it weren't for the cost restriction.

^This. $600, while unusually expensive for a console, is a steal for a capable PC, which is why so many people were eager to run Linux on a PS3.

#39

Posted 02 March 2012 - 08:09 PM

#40

Posted 03 March 2012 - 05:03 AM

OP: Your figures are very wrong and based on speculation that is also very wrong.

If the Wii U does have a chip with similar horsepower to the 4870, then that would make it some 5 times faster. Also, there is little chance that MS will use a 6670 in the next Xbox. The recently released 7770 would cost MS about $30 per chip and be 70% faster than a 6670; there is no way that a company with unlimited cash is going to go cheap on a GPU. Expect the next-gen Xbox to use something similar to a 7850, which will be a little slower than the current 6950.

Finally... it's unlikely that the Wii U will be as powerful as a 4870, due to the amount of heat it, and the CPU required to drive it, would generate. One of the only bits of solid info we were given is that the CPU would be 45nm, which is very, very old tech; Intel was on 45nm 5 years ago. Most likely it will be 50% faster than the current Xbox.


45nm is tech that AMD was using around 2009. Intel doesn't make video cards (or at least not good ones), so who cares about them. But Nintendo started out with a 4870; that doesn't mean that's what they're using. I said this before, I'll say it again: THAT CARD is the BASIS for the Evergreen Series, which is the HD5xxx Series of Radeon cards. So assuming work started around 2008-2009, they probably did the logical thing: as the 4870 evolved into a better, more energy-efficient card, so did the Wii U's GPU.

And you're forgetting that Nintendo's console is very long and has a lot of vents.

We can only assume the other side probably has the same number of vents. We know the back has a big one. A company doesn't give a lot of space to hardware that runs cool. That would be stupid. They give a lot of room and vents to hardware that runs hot and needs to stay cool.

- BazzDropperz likes this
